The main purpose of an ETL (extract, transform, load) tool is to extract data from one system, transform it, and load it into another. The tool should do this quickly and with minimal resources. The load step usually targets a database. To make the load more efficient, indexes and constraints can be disabled while it runs and re-enabled afterward. Because disabled constraints no longer reject invalid rows, the loaded data must then be validated so that referential integrity is maintained. It is therefore vital to know how to optimize a data load process without sacrificing referential integrity.
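As a minimal sketch of loading with constraints disabled and validating afterward, the example below uses Python's built-in `sqlite3` module. The `orders` and `order_details` tables and the `bulk_load_with_deferred_checks` helper are hypothetical names chosen for illustration. Other databases expose different commands for disabling and re-checking constraints.

```python
import sqlite3

def bulk_load_with_deferred_checks(conn, orders, order_details):
    """Load rows with foreign key enforcement off, then report violations."""
    # SQLite ignores this PRAGMA inside a transaction, so set it before loading.
    conn.execute("PRAGMA foreign_keys = OFF")
    with conn:  # one transaction for the whole batch
        conn.executemany("INSERT INTO orders (order_id) VALUES (?)", orders)
        conn.executemany(
            "INSERT INTO order_details (detail_id, order_id) VALUES (?, ?)",
            order_details,
        )
    # foreign_key_check returns one row per child row that breaks a constraint.
    violations = conn.execute("PRAGMA foreign_key_check").fetchall()
    conn.execute("PRAGMA foreign_keys = ON")
    return violations

# Hypothetical schema: every order_details row must point at an existing order.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY);
    CREATE TABLE order_details (
        detail_id INTEGER PRIMARY KEY,
        order_id INTEGER NOT NULL REFERENCES orders(order_id)
    );
""")
violations = bulk_load_with_deferred_checks(
    conn,
    orders=[(1,), (2,)],
    order_details=[(10, 1), (11, 2), (12, 99)],  # order 99 does not exist
)
for table, rowid, parent, _fk_index in violations:
    print(f"{table} row {rowid} references a missing row in {parent}")
```

The load itself never fails here. The invalid row is only discovered by the check that runs after the batch, which is why that validation step cannot be skipped.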

Referential integrity

An ETL tool can validate data for referential integrity. It checks whether every foreign key value in a child table matches a primary key value in its parent table. For example, in the Northwind database, one table contains orders, and another contains the details of each order. For an order detail to be valid, it must carry the number of an existing order. The referential integrity constraint ensures that every row in the child table references exactly one existing row in the parent table.

Referential integrity means that two related tables remain consistent when data changes. It concerns the completeness of data across the two tables. The principle is best explained through the concept of parent/child relationships. A value in the child table must exist in the parent table to be considered valid. A child row whose value does not exist in the parent table is an orphan, and the tables no longer have referential integrity. Orphans can arise for many reasons, such as a parent row being deleted while its children remain, or child rows being loaded while constraints are disabled.
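Orphans can be found with an anti-join: join each child row to its parent and keep the rows where no parent was found. The sketch below assumes hypothetical `orders` (parent) and `order_details` (child) tables in an in-memory SQLite database, with no constraint declared so that orphans can exist.

```python
import sqlite3

# Child rows with a non-NULL foreign key value that matches no parent row.
ORPHAN_QUERY = """
    SELECT c.detail_id, c.order_id
    FROM order_details AS c
    LEFT JOIN orders AS p ON p.order_id = c.order_id
    WHERE p.order_id IS NULL
      AND c.order_id IS NOT NULL
    ORDER BY c.detail_id
"""

def find_orphans(conn):
    """Return (detail_id, order_id) pairs whose parent order is missing."""
    return conn.execute(ORPHAN_QUERY).fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY);
    CREATE TABLE order_details (detail_id INTEGER PRIMARY KEY, order_id INTEGER);
    INSERT INTO orders VALUES (1), (2);
    INSERT INTO order_details VALUES (10, 1), (11, 2), (12, 3);
    DELETE FROM orders WHERE order_id = 2;  -- parent removed, child left behind
""")
orphans = find_orphans(conn)
print(orphans)  # [(11, 2), (12, 3)]
```

Row 11 became an orphan when its parent was deleted, and row 12 never had a parent at all. Both cases show up in the same query.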

Migration of unstructured data

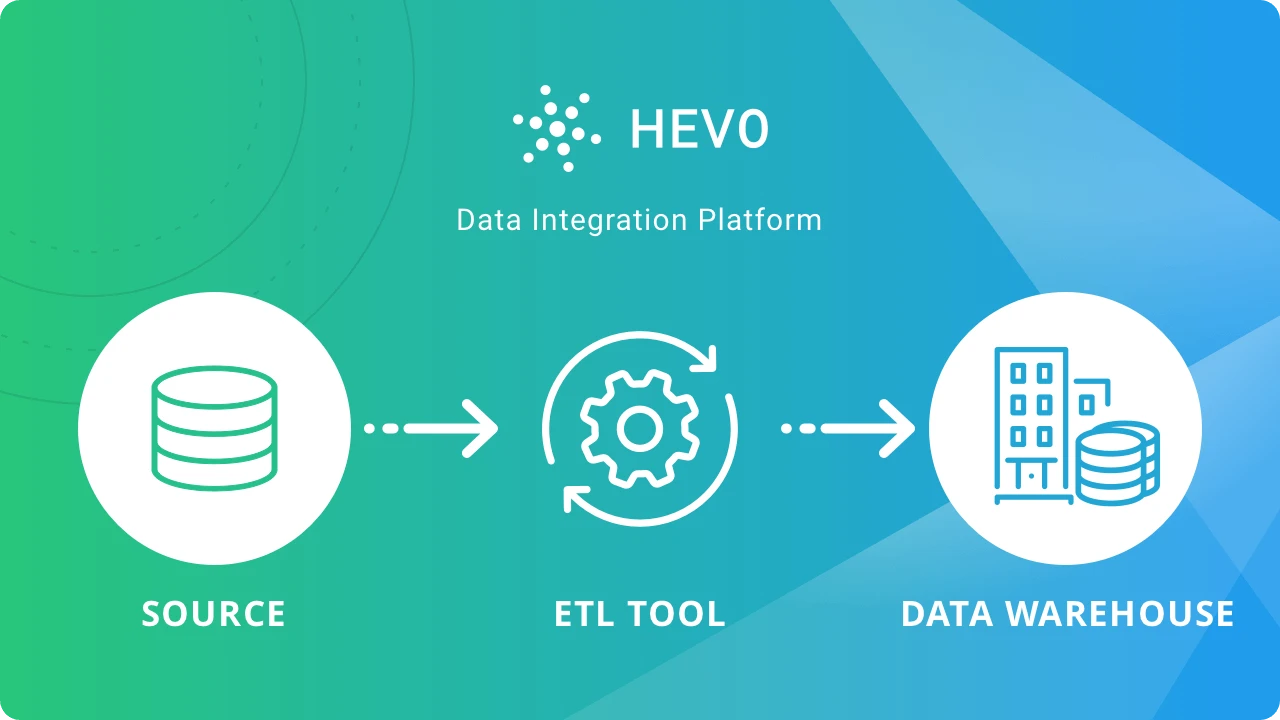

ETL tools are essential to data management. They help you migrate data from one source to another. While the main purpose of ETL tools is the migration of structured data to a new storage format, they are also increasingly used for reverse ETL, which sends cleaned data from a data warehouse back into a business application. But how do ETL tools work? What are the different types of ETL tools available?

ETL is a process that combines data extraction, transformation, and loading. It gathers data from different sources that vary in volume, type, and reliability. The target data store, in turn, may be a database, data warehouse, or data lake. The ETL tool identifies the data sources, copies their data, and moves it to the target data store. This can include both unstructured and structured data.

Real-time data integration

Real-time data integration is valuable for most businesses, as it can improve decision-making, break down information silos, and streamline processes. For example, when you book a vacation, the booking system updates the master database immediately. Likewise, POS terminals and cash machines use the same approach to update your account when you pay with your PIN or withdraw money. Real-time data integration begins with change data capture (CDC), that is, capturing and transmitting the changes made in a source system.
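To illustrate the idea of change data capture, here is a simplified sketch that compares two snapshots of a table and emits insert, update, and delete events. Production CDC systems typically read the database's transaction log instead. Snapshot comparison is swapped in here only because it fits in a few lines. The function names and the account data are hypothetical.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return change events."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key in previous:
        if key not in current:
            changes.append(("delete", key, None))
    return changes

def apply_changes(target, changes):
    """Replay change events against a target copy of the table."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:  # insert and update both write the new row
            target[key] = row
    return target

# Hypothetical account balances before and after some source activity.
before = {1: {"balance": 100}, 2: {"balance": 50}}
after = {1: {"balance": 80}, 3: {"balance": 20}}

events = capture_changes(before, after)
replica = apply_changes({k: dict(v) for k, v in before.items()}, events)
print(events)
print(replica == after)  # True
```

Only the three change events travel to the target, not the full table, which is what keeps this style of integration cheap enough to run continuously.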

ETL tools are a critical component in the development of business intelligence. These tools allow data from various sources to be loaded into different destinations. Data integration, which is crucial for real-time decisions, is accomplished through three steps. First, data is extracted from its source. Next, it is cleaned and transformed into the format the destination expects. Finally, it is loaded into the target location, such as a database or business intelligence application.
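The three steps can be sketched as three small functions. This is a minimal illustration, assuming a CSV string as the source and an in-memory SQLite table as the destination. The `sales` table and the `customer` and `amount` columns are hypothetical.

```python
import csv
import io
import sqlite3

def extract(csv_text):
    """Extract: read raw rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def transform(rows):
    """Transform: clean names, convert amounts, drop rows without a customer."""
    cleaned = []
    for row in rows:
        name = row["customer"].strip().title()
        if not name:
            continue  # skip rows that cannot be attributed to a customer
        cleaned.append((name, round(float(row["amount"]), 2)))
    return cleaned

def load(conn, rows):
    """Load: write the transformed rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL)")
    with conn:  # commit all rows together
        conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

SOURCE = "customer,amount\n  alice ,10.50\nBOB,3\n,7\n"

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE)))
loaded = conn.execute(
    "SELECT customer, amount FROM sales ORDER BY customer"
).fetchall()
print(loaded)  # [('Alice', 10.5), ('Bob', 3.0)]
```

Keeping each step in its own function makes it possible to test the transformation rules without touching either the source or the target system.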

Data cleansing

Data cleansing is a necessary process, especially when dealing with large amounts of data. Because data quality directly affects revenue, data scientists must strive to minimize errors in their work. Without regular data cleansing, mistakes accumulate and efficiency decreases. Fortunately, there are a variety of solutions to this problem. These solutions vary in price, but all share the same main purpose: to cleanse data.

Data cleansing reduces the risk of error and enhances the reliability of the data. There are five steps involved in data cleansing. The process starts with strategy, which involves understanding the data and planning its use. This step is not the same for every dataset. The purpose of the strategy is to make data cleansing as efficient as possible. As much of the cleansing process as possible should also be automated.
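As one possible illustration of automated cleansing, the sketch below trims whitespace, normalizes a key field to lowercase, rejects records that lack the key, and drops duplicates. The `cleanse` function, the `email` key field, and the sample records are assumptions made for the example. Real cleansing rules depend on the dataset and the strategy chosen for it.

```python
def cleanse(records, key_field="email"):
    """Return (clean, rejected) lists; assumes the key field holds strings."""
    seen, clean, rejected = set(), [], []
    for record in records:
        # Trim surrounding whitespace from every string value.
        row = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        key = (row.get(key_field) or "").lower()
        if not key:
            rejected.append(record)  # keep the original for later review
            continue
        if key in seen:
            continue  # duplicate of a record already kept
        seen.add(key)
        row[key_field] = key
        clean.append(row)
    return clean, rejected

raw = [
    {"name": " Ada ", "email": "ADA@example.com "},
    {"name": "Ada", "email": "ada@example.com"},  # duplicate after normalizing
    {"name": "Bob", "email": ""},                 # missing key field
]
clean, rejected = cleanse(raw)
print(clean)     # [{'name': 'Ada', 'email': 'ada@example.com'}]
print(rejected)  # [{'name': 'Bob', 'email': ''}]
```

Rejected records are returned rather than silently discarded, so they can be corrected at the source instead of disappearing from the dataset.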